If you just want to see the final code, scroll about halfway down; otherwise, stay on for a bit of a babble first... 📣

On the whole, FlatPress is not terrible in terms of performance and I haven't had many issues with it. However, lately I have been noticing some stability problems as my blog increased in popularity and the number of posts grew: things like corrupt indexes, resetting post view counts, slow response times, etc. And then I wrote a story which catapulted my traffic to 50x its usual volume. And my server went down. Not once but multiple times. There were several hours of excruciatingly slow page loads, and people resorted to using Archive.org copies of my story. That's when I knew I had to do something.

Caching Generated HTML

My first thought was to use memcache to keep generated HTML pages in memory and serve those instead of generating HTML every time a blog post was requested. Simple idea, but I didn't want to go through the motions of setting up memcache in MAMP. So I fell back to using APC, as it required no setup and I knew that I could enable the APCu extension on my webhost.

Looking at index.php, I could see that the Smarty::display() method was used to render and output the content of a page/post. Simple but effective and I thought I would be done with this very quickly.

index.php

    function index_display() {
        ...
        $smarty->display($module);
        ...
    }

The trick was of course to switch to the Smarty::fetch method, cache that output, and serve cached output as required. The cache key was based on $_SERVER['REQUEST_URI'].
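
A minimal sketch of that fetch-then-cache idea, under loud assumptions: a plain array stands in for APC, a closure stands in for Smarty::fetch, and the `page:` key prefix and the `cached_page()` helper are hypothetical names used only for illustration. It is not the code that ran on the blog, just the shape of the technique.

```php
<?php
// Hypothetical stand-in for APC: a plain array that lives for one request.
// A real setup would call the APC fetch/store functions instead.
$cache = [];

// Hypothetical stand-in for $smarty->fetch($module): returns the HTML as a
// string instead of echoing it, and counts how often it had to run.
$renderCount = 0;
$render = function (string $uri) use (&$renderCount): string {
    $renderCount++;
    return "<html><body>Page for {$uri}</body></html>";
};

// Return the page for $uri, rendering it only when no cached copy exists.
function cached_page(array &$cache, callable $render, string $uri): string
{
    $cacheKey = 'page:' . $uri; // assumed key scheme: prefix + request URI
    if (!array_key_exists($cacheKey, $cache)) {
        $cache[$cacheKey] = $render($uri); // cache miss: render and remember
    }
    return $cache[$cacheKey]; // cache hit, or the copy stored just above
}

// Requesting the same URI twice renders it only once.
$first  = cached_page($cache, $render, '/some-post/');
$second = cached_page($cache, $render, '/some-post/');
```

The important detail is that the renderer returns a string rather than echoing it; output that is written straight to the browser never passes through the cache.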

Well, that approach worked. In a way. It only worked when I was caching blog post pages. Everything else, like category listings, the home page, the sitemap, etc., was only partially cached. For some reason only part of the HTML was being generated by Smarty and the rest - well, it was being sent to the browser from some other part of the code (I didn't track down where exactly; I did look, and what I found were horrors, so I stopped looking 😅).

Adding a Cache Handler to Smarty

So then I decided to try a different approach by implementing a Smarty Cache Handler that would work transparently to FlatPress, so that, at least in theory, I would not have to worry about the rest of the code base. Well, the cache handler worked...for blog post pages only. This indicated that FlatPress was doing something bad deep inside its core. Back to square one.

All fails - What did I really want to achieve?

At this point I had spent way longer than I initially projected (or wanted) to spend on this enhancement. I had to evaluate what I really wanted to do, and that came down to just two statements:

- make the most minimal change possible

- speed up only the most popular content

Addressing both points in one hit, I decided that I didn't need to be able to cache everything that FlatPress generated. I only needed to cache the most requested blog posts. This meant that I could use code from my first attempt! ...it just needed a couple of checks added to it.

Let's see some code!

So I ended up implementing something like this in place of the original index_display() method (with all my other customisations removed for brevity)...

index.php

    function index_display() {
        global $smarty, $fp_config;

        $loggedIn = user_loggedin();
        $cacheKey = 'ikb' . $_SERVER['REQUEST_URI'];
        $content = apc_fetch($cacheKey); // false on a cache miss

        // Render the page on a cache miss, and always for logged-in users.
        if ($content === false || $loggedIn) {
            $module = index_main();
            $content = $smarty->fetch($module);

            // Only cache blog post pages, and never a logged-in user's view.
            if (($module == 'comments.tpl' || $module == 'single.tpl') && !$loggedIn) {
                apc_store($cacheKey, $content, 3600); // keep for one hour
            }

            unset($smarty);
            do_action('shutdown');
        }

        echo $content;
    }

The logic is extremely simple. First, a check is performed for missing cache data or a logged-in admin user. When both of these conditions fail, i.e. we have data and the user is not logged in, the cached data is simply returned to the browser. When either condition is met, however, we process the request and generate the page using Smarty. Then, if the request was for a blog post (the module is comments.tpl or single.tpl) and the user is not logged in, we cache the result for an hour. The result is then returned to the browser.
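
The two checks can also be pulled out into small pure functions, which makes each combination easy to try without APC or Smarty. This is only a sketch: `can_serve_cached()`, `should_store()` and `CACHEABLE_MODULES` are hypothetical names, and `index.tpl` is an assumed example of a non-post template.

```php
<?php
// Template names that are treated as "blog post" pages.
const CACHEABLE_MODULES = ['comments.tpl', 'single.tpl'];

// True when the cached copy can be sent as-is: a copy exists (the fetch did
// not return false) and the visitor is not logged in.
function can_serve_cached($cached, bool $loggedIn): bool
{
    return $cached !== false && !$loggedIn;
}

// True when freshly rendered output should be stored: it is a blog post
// page and it was not rendered for a logged-in user.
function should_store(string $module, bool $loggedIn): bool
{
    return in_array($module, CACHEABLE_MODULES, true) && !$loggedIn;
}

// Anonymous visitor with a cache hit: serve the cached copy.
$serveHit = can_serve_cached('<html>...</html>', false);
// Logged-in user with a cache hit: render fresh anyway.
$serveAdmin = can_serve_cached('<html>...</html>', true);
// Anonymous visitor on a non-post page (assumed name): render, do not store.
$storeListing = should_store('index.tpl', false);
```

Keeping logged-in users out of both paths means an admin never sees a stale page, and whatever is rendered for an admin never ends up in front of anonymous visitors.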

Easy, right?! However, we need to think about what to do when a blog post is updated. Obviously the cached entry, if any, needs to be cleared. However, after digging around the code I could not find an easy way to generate the same cache key on the admin side as in the index.php file. This was because I was using the 'Pretty URLs' plugin, which rewrote URLs from internal IDs to something easier to read. Also, I didn't want to write too much more code here. So I did the naive thing - flush the entire cache whenever any blog post is updated! 😎 It's like using a sledgehammer to kill a fly, but it was a minimal code change and was guaranteed to work whether the 'Pretty URLs' plugin was being used or not. This required one line to be added to the onsave() function in admin.entry.write.php...

admin/panels/entry/admin.entry.write.php

    function onsave($do_preview = false) {
        apc_clear_cache('user');
        ...

Still that wasn't the end. I was using the PostViews plugin which counted the number of views a blog post received. Because this plugin was never triggered for cached posts, view counts were not updated. Boo. 😞

I actually addressed this kind of scenario way back when I modified PostViews to use a tracking pixel. Back then, I found that approach didn't work very effectively so wasn't using that change any more. Still I needed to do something here and since I already had a solution that half-way worked I figured that instead of a tracking pixel, I could use an iframe. This would get past any image caching issues I experienced in the past and as a bonus would actually reduce traffic (even a single pixel PNG is much larger than what I send in an iframe).

So based on my tracking pixel PostViews plugin, I made these changes...first the plugin_postviews_track() method was replaced with...

fp-plugins/postviews/plugin.postviews.php

    function plugin_postviews_track($content) {
        global $smarty;

        $id = $smarty->get_template_vars('id');

        // Strip 'entry' and '-' from the entry ID, then encode it in base 62.
        $shortId = shorturl_toBase(str_replace('-', '', str_replace('entry', '', $id)), 62);

        // Append a 1x1 iframe that requests v.php to record the view.
        return $content .
            '<iframe src="/'.PLUGINS_DIR.'postviews/v.php?v='.$shortId.'" width="1" height="1" style="border:0; display:block;"></iframe>';
    }

...and in v.php the block of code after the "// output 1x1 transparent tracking pixel" line became this...

fp-plugins/postviews/v.php

    /* output a single character page with caching turned off */
    header("Cache-Control: no-cache, no-store, must-revalidate"); /* HTTP 1.1. */
    header("Pragma: no-cache"); /* HTTP 1.0. */
    header("Expires: 0"); /* Proxies. */
    echo "#";

The idea here is that I append a 1x1 pixel iframe to the blog post, which triggers the post views plugin to increment the view count. This is not ideal, but since I was going for a quick and easy solution it worked well. (Ideally post views should be recorded by the core system, not something that is external to a page.)

That was the extent of my changes. Really not a huge amount of code! The biggest time sink here was in trying to figure out why FlatPress wasn't playing nice with template output. That's still a mystery which I will leave for the FlatPress team.

The results

So how do I know that this actually works? Well by chance I wrote a post a week after these changes went live. That post attracted over 120x the usual amount of traffic. This was more than double my previous record and I was sure the server would go down again. It didn't!

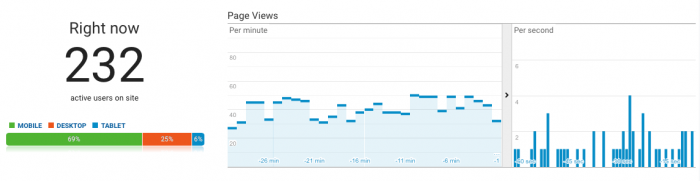

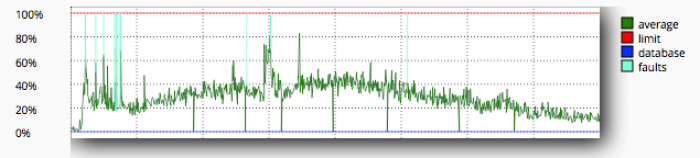

At the peak of it there were more than 200 concurrent users with around 4-10 requests per second (even the low end of that works out to more than 340k requests per day). The CPU usage remained at an average of around 30%. I was happy with this. There were a few faults over the 24-hour period, but for the most part the server remained stable.

Now, this is nowhere near comparable to what the very large blogs and news websites would be getting on a daily basis, but considering that FlatPress couldn't handle half this load before these hacks were implemented, it's a decent improvement. My estimate is that FlatPress without these changes would cap out at around 1 request per second, which still allows it to serve a lot of requests (86k per day), but when a story becomes popular and you have many users trying to access it, out-of-the-box FlatPress runs out of steam.
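
For anyone checking the per-day figures, the conversion is just the request rate multiplied by the number of seconds in a day. A small sketch, assuming the rate is sustained for a full 24 hours (`requests_per_day()` is a hypothetical helper):

```php
<?php
// Seconds in a day: 24 hours * 60 minutes * 60 seconds = 86400.
const SECONDS_PER_DAY = 24 * 60 * 60;

// Convert a sustained request rate into a daily total.
function requests_per_day(int $requestsPerSecond): int
{
    return $requestsPerSecond * SECONDS_PER_DAY;
}

$baseline = requests_per_day(1);   // estimated ceiling without caching
$peakLow  = requests_per_day(4);   // lower end of the observed peak
$peakHigh = requests_per_day(10);  // upper end of the observed peak

printf("%d / %d / %d requests per day\n", $baseline, $peakLow, $peakHigh);
// prints "86400 / 345600 / 864000 requests per day"
```

So 1 request per second is roughly 86k requests per day, and the 4-10 requests per second seen at the peak would be roughly 345k to 864k per day if sustained.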

Lessons learned

The #1 takeaway from this is - cache as much as you can and avoid unnecessary processing in web applications. This becomes especially important when hosting applications on services like App Engine, where you are billed based on the resources you use.

And #2 is - even small, 'hacky' changes can have huge positive impacts and you should not shy away from them under the right circumstances.

I hope the FlatPress team can take this and make it part of the core system. I won't be taking this task on because I've decided to move to a different system, and I'll have more to write on that later.

-i